D-Bus (Desktop Bus) is a simple open-source inter-process communication (IPC) system that lets software applications communicate with one another. It replaced DCOP in KDE 4 and has also been adopted by GNOME, Xfce and other desktops. It is, in fact, the main interoperability mechanism in the “Linux desktop” world thanks to the freedesktop.org standards.

The architecture of D-Bus is pretty simple: there is a dbus-daemon server process which runs locally and acts as a “messaging broker” and applications exchange messages through the dbus-daemon.
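To make the broker idea concrete, here is a toy, in-process model in Python. It is only a sketch: the `ToyBroker` class and names such as `org.example.Player` are made up for illustration, and it ignores everything the real dbus-daemon does with sockets, authentication and the wire protocol.

```python
from collections import defaultdict


class ToyBroker:
    """Toy stand-in for dbus-daemon: routes messages between named clients."""

    def __init__(self):
        self._handlers = {}                    # bus name -> function handling calls
        self._subscribers = defaultdict(list)  # signal name -> list of callbacks

    def register(self, name, handler):
        # A client claims a bus name and supplies a function to handle calls.
        if name in self._handlers:
            raise ValueError(f"name already taken: {name}")
        self._handlers[name] = handler

    def call(self, destination, method, *args):
        # The sender never talks to the receiver directly; the broker forwards.
        handler = self._handlers.get(destination)
        if handler is None:
            raise LookupError(f"no such name on the bus: {destination}")
        return handler(method, *args)

    def subscribe(self, signal, callback):
        self._subscribers[signal].append(callback)

    def emit(self, signal, payload):
        # Broadcast: every subscriber receives the payload.
        for callback in self._subscribers[signal]:
            callback(payload)


def player(method, *args):
    # Hypothetical service that only understands "Play".
    if method == "Play":
        return f"playing {args[0]}"
    raise NotImplementedError(method)


broker = ToyBroker()
broker.register("org.example.Player", player)
reply = broker.call("org.example.Player", "Play", "song.ogg")

received = []
broker.subscribe("TrackChanged", received.append)
broker.emit("TrackChanged", "song.ogg")
```

The point of the sketch is that every message goes through the broker, so the broker has to be running for anything to work.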

But of course you already knew that because you are supersmart developers and/or users.

D-Bus on Windows

What you may not know is how much damage D-Bus is doing to open source software on Windows.

A few years ago I tried to introduce kdelibs for a large cross-platform project but it was rejected, despite some obvious advantages, mainly due to D-Bus.

Performance and reliability back then were horrible. It works much better these days but it still scares Windows users. In fact, you may also replace “it scares Windows users” with “it scares IT departments in the enterprise world*”.

The reason?

A dozen processes started seemingly at random, IPC with no security at all, applications that are difficult to upgrade or kill, no way to know when to update them, and many more. I’m not making this up; this has already happened to me.

* yes, I know our friends from Kolab are doing well, but how many KDE applications on the desktop have you seen outside that “isolation bubble”?

D-Bus on mobile

Another problem is that D-Bus is not available on all platforms (Android, Symbian, iOS, etc.), which makes porting KDE applications to those platforms difficult.

Sure, Android uses D-Bus internally, but that’s an implementation detail and we don’t have access to it. That means we still need a solution for platforms where you cannot run or access dbus-daemon.

Do we need a daemon?

A few months ago I was wondering: do we really need this dbus-daemon process at all?

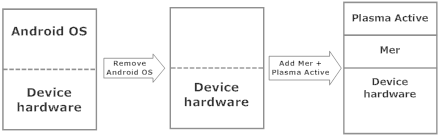

What we have now looks like this:

As you can see, D-Bus is a local IPC mechanism, i.e. it does not allow applications to communicate over the network (although technically, it would not be difficult to implement). And every operating system these days has its own IPC mechanism. Why create a new one with a new daemon? Can’t we use an existing one?

I quickly got my first answer: D-Bus was created to expose a common API (and message and data format, i.e. a common “wire protocol”) to applications, so that it’s easy to exchange information.

As for the second answer, reusing an existing one, it’s obvious we cannot: KDE applications run on a variety of operating systems, each of which has a different “native” IPC mechanism. Unices (Linux, BSD, etc.) may be quite similar, but Windows, Symbian, etc. are definitely very different.

No, we don’t!

So I thought: let’s use some technospeak buzzword and make HR people happy! The façade pattern!

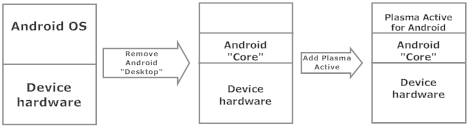

Let’s implement a libdbusfat which offers the libdbus API on one side but talks to a native IPC service on the other side. That way we could get rid of the dbus-daemon process and use the platform IPC facilities. For each platform, a different “native IPC side” would be implemented: on Windows it could be COM, on Android something else, etc.
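For those who prefer code to diagrams, the façade idea can be sketched in a few lines of Python. Everything here is hypothetical: `BusFacade`, `Transport` and the two transports are illustrative names, not the libdbus API, and the transports only return tuples instead of doing real IPC.

```python
from abc import ABC, abstractmethod


class Transport(ABC):
    """Hypothetical 'native IPC side': one subclass per platform."""

    @abstractmethod
    def send(self, destination, method, args):
        ...


class DaemonTransport(Transport):
    # Stands in for today's path through dbus-daemon.
    def send(self, destination, method, args):
        return ("dbus-daemon", destination, method, args)


class NativeTransport(Transport):
    # Stands in for a platform mechanism (COM on Windows, for instance).
    def __init__(self, mechanism):
        self.mechanism = mechanism

    def send(self, destination, method, args):
        return (self.mechanism, destination, method, args)


class BusFacade:
    """The side applications see; its API does not change with the transport."""

    def __init__(self, transport):
        self._transport = transport

    def call(self, destination, method, *args):
        return self._transport.send(destination, method, args)


def notify(bus):
    # Application code: written once, unaware of what carries the message.
    return bus.call("org.example.Notifications", "Notify", "hello")


via_daemon = notify(BusFacade(DaemonTransport()))
via_native = notify(BusFacade(NativeTransport("COM")))
```

Note that `notify` is identical in both cases; only the object handed to the façade changes. That is the whole trick.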

Pros

The advantage of libdbusfat is that applications would not need any changes and would still be able to use D-Bus, which at the moment is important for cross-desktop interoperability.

On Unix platforms, applications would link to libdbus and talk to dbus-daemon.

On Windows, Android, etc, applications would link to libdbusfat and talk to the native IPC system.

By the magic of this façade pattern, we could compile, for instance, QtDBus so that it works exactly like it does currently but does not require dbus-daemon on Windows. Or Symbian. Or Android.
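The per-platform split boils down to a single decision made when the application is built. A rough sketch of that decision, assuming made-up platform labels and a hypothetical `bus_library` helper (a real build system would do this in its own configuration language):

```python
# Platform labels are assumptions for illustration only.
UNIX_LIKE = ("linux", "freebsd", "openbsd", "netbsd")


def bus_library(platform):
    """Return the library an application would link against on `platform`."""
    if platform.startswith(UNIX_LIKE):
        return "libdbus"     # talks to dbus-daemon, as today
    return "libdbusfat"      # talks to the platform's native IPC


choices = {p: bus_library(p) for p in ("linux", "freebsd", "windows", "android")}
```

The application source is the same in every case; only the library behind the libdbus API differs.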

QtMobility?

QtMobility implements a Publish/Subscribe API with a D-Bus backend but it serves a completely different purpose: it’s not available to glib/gtk/EFL/etc. and it’s implemented in terms of QtDBus (which in turn uses dbus-daemon for D-Bus services on every platform).

It’s, in fact, a perfect candidate to become a user of libdbusfat.

Cons

A lot of work.

You need to cut dbus-daemon in half and establish a clear API which can be implemented in terms of each platform’s IPC, then deal with data conversion, performance, etc. Very interesting work if you’ve got the time to do it, I must say. Perfect for a Google Summer of Code, if you already know D-Bus and IPC on two different-enough platforms (Linux and Windows, or Linux and Android, or Linux and iOS, etc.).

Summary

TL;DR: The idea is to be able to compile applications that require D-Bus without needing to change the application. This may or may not be true on Android depending on the API, but it is true for Windows.

Are you brave enough to develop libdbusfat in a Qt or KDE GSoC?